June 28, 2018

In Army of None, a field guide to the coming world of autonomous warfare

Source: TechCrunch

Journalist Danny Crichton

The Silicon Valley–military-industrial complex is increasingly in the crosshairs of artificial intelligence engineers. A few weeks ago, Google was reported to be backing out of a Pentagon contract around Project Maven, which would use image recognition to automatically evaluate photos. Earlier this year, AI researchers around the world joined petitions calling for a boycott of any research that could be used in autonomous warfare.

For Paul Scharre, though, such petitions barely touch the deep complexity, nuance, and ambiguity that will make evaluating autonomous weapons a major concern for defense planners this century. In Army of None, Scharre argues that merely agreeing on definitions for these machines will take nations enormous effort, let alone managing their effects. It’s a sobering, thoughtful, if at times protracted look at this critical topic.

Scharre should know. A former Army Ranger, he joined the Pentagon to work in the Office of the Secretary of Defense, where he developed some of the Defense Department’s first policies around autonomy. After leaving in 2013, he joined the DC-based think tank the Center for a New American Security, where he directs a center on technology and national security. In short, he has spent about a decade on this emerging tech, and his expertise clearly shows throughout the book.

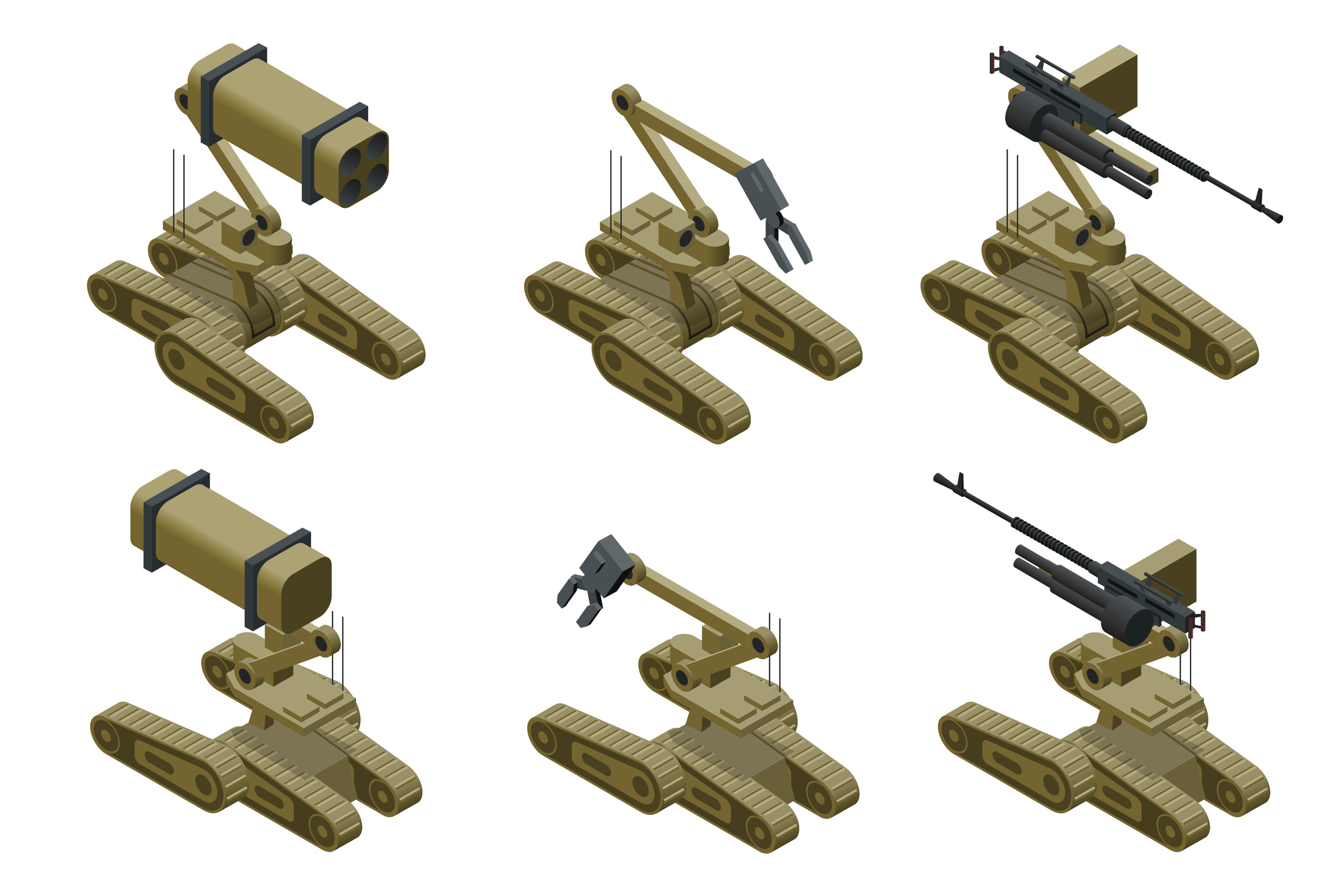

The first challenge these petitions overlook is that such systems already exist and are already deployed in the field. Technologies like the Aegis Combat System, the High-speed Anti-Radiation Missile (HARM), and the Harpy already include sophisticated autonomous features. As Scharre writes, “The human launching the Harpy decides to destroy any enemy radars within a general area in space and time, but the Harpy itself chooses the specific radar it destroys.” The weapon can loiter for 2.5 hours while its sensors search for a target. Is it autonomous?

Scharre repeatedly uses the military’s OODA loop (observe, orient, decide, and act) as a framework for determining a given machine’s level of autonomy. Humans can be “in the loop,” where they determine the machine’s actions; “on the loop,” where the machine mostly works independently but humans supervise it and can intervene; or “out of the loop,” where the machine operates entirely independently of human decision-making.

Read the Full Review at TechCrunch